Timothy Asp, Caleb Carlton. Association-Rule-Mining-Hadoop-Python. Input Format: python apriori. Association rule learning is intended to identify strong rules discovered in databases using some measures of interestingness.

We used confidence as a measure of interestingness. We implemented support counting using hash trees. For the sake of comparison, we have left the code for the brute-force method in, commented out.
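The hash-tree code itself is not reproduced here, so the following is a minimal sketch of hash-tree support counting in general, not the authors' implementation. The class names and the `branching` and `max_leaf_size` parameters are hypothetical, and candidates are assumed to be sorted tuples of integer item ids. Candidates live in the leaves and internal nodes route on the hash of one item position. Each transaction therefore only visits the leaves its items can reach, instead of being checked against every candidate as in the brute-force method.

```python
from collections import defaultdict
from itertools import combinations


class HashTreeNode:
    """One node of the hash tree (hypothetical sketch); leaves store candidates."""

    def __init__(self, depth):
        self.depth = depth      # item position this node hashes on once it splits
        self.children = {}      # bucket -> HashTreeNode, used after the node splits
        self.itemsets = []      # candidate itemsets, used while the node is a leaf
        self.is_leaf = True


class HashTree:
    """Stores candidate k-itemsets (sorted tuples) for support counting."""

    def __init__(self, k, branching=3, max_leaf_size=3):
        self.k = k
        self.branching = branching
        self.max_leaf_size = max_leaf_size
        self.root = HashTreeNode(depth=0)
        self.candidates = []

    def _bucket(self, item):
        return hash(item) % self.branching

    def _child(self, node, item):
        key = self._bucket(item)
        if key not in node.children:
            node.children[key] = HashTreeNode(node.depth + 1)
        return node.children[key]

    def _split(self, node):
        # Turn an overfull leaf into an internal node, redistributing by itemset[depth].
        node.is_leaf = False
        stored, node.itemsets = node.itemsets, []
        for itemset in stored:
            self._child(node, itemset[node.depth]).itemsets.append(itemset)
        for child in node.children.values():
            if len(child.itemsets) > self.max_leaf_size and child.depth < self.k:
                self._split(child)

    def insert(self, itemset):
        self.candidates.append(itemset)
        node = self.root
        while not node.is_leaf:
            node = self._child(node, itemset[node.depth])
        node.itemsets.append(itemset)
        # A leaf at depth k has no item position left to hash on, so it never splits.
        if len(node.itemsets) > self.max_leaf_size and node.depth < self.k:
            self._split(node)

    def count(self, transactions):
        """Return {candidate: number of transactions containing it}."""
        counts = defaultdict(int)
        for transaction in transactions:
            items = sorted(set(transaction))
            matched = set()  # a leaf can be reached twice; count each candidate once
            self._visit(self.root, items, 0, set(items), matched)
            for itemset in matched:
                counts[itemset] += 1
        return {c: counts[c] for c in self.candidates}

    def _visit(self, node, items, start, item_set, matched):
        if node.is_leaf:
            for itemset in node.itemsets:
                if item_set.issuperset(itemset):
                    matched.add(itemset)
            return
        # items[i] can fill position node.depth only if enough items remain after it.
        last = len(items) - (self.k - node.depth)
        for i in range(start, last + 1):
            child = node.children.get(self._bucket(items[i]))
            if child is not None:
                self._visit(child, items, i + 1, item_set, matched)


transactions = [[1, 2, 3], [1, 2, 4], [2, 3, 4], [1, 2, 3, 4], [2, 4]]
tree = HashTree(k=2, branching=2, max_leaf_size=2)
for candidate in combinations(range(1, 5), 2):
    tree.insert(candidate)
support = tree.count(transactions)
print(support[(2, 4)])  # 4
```

Because a leaf can be reached along more than one path for the same transaction, matches are first collected in a set so each candidate is counted at most once per transaction.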

Support counting using hash tree: 5. Please feel free to uncomment the brute-force code and try it out. A mapping was created from the unique items in the dataset to integers, so that each item corresponds to a unique integer. A reverse mapping from the integers back to the items was also created, so that the item names could be written to the final output file. Rule generation is a common task in the mining of frequent patterns.
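A minimal sketch of such a forward and reverse mapping, assuming each transaction is a list of item names; the function names are hypothetical. Sorting the unique items first simply makes the integer ids deterministic.

```python
def build_item_mappings(transactions):
    """Map each unique item to a unique integer, plus the reverse mapping (sketch)."""
    unique_items = sorted({item for t in transactions for item in t})
    item_to_id = {item: idx for idx, item in enumerate(unique_items)}
    id_to_item = {idx: item for item, idx in item_to_id.items()}
    return item_to_id, id_to_item


def encode(transactions, item_to_id):
    """Replace item names by their ids; each transaction becomes a sorted id list."""
    return [sorted(item_to_id[item] for item in set(t)) for t in transactions]


def decode(itemset, id_to_item):
    """Turn an itemset of ids back into item names, e.g. before writing output."""
    return [id_to_item[i] for i in itemset]


transactions = [["milk", "bread"], ["bread", "butter", "milk"], ["butter"]]
item_to_id, id_to_item = build_item_mappings(transactions)
encoded = encode(transactions, item_to_id)
print(item_to_id)                   # {'bread': 0, 'butter': 1, 'milk': 2}
print(encoded)                      # [[0, 2], [0, 1, 2], [1]]
print(decode((0, 2), id_to_item))   # ['bread', 'milk']
```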

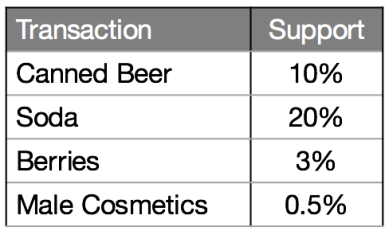

To evaluate the interest of such an association rule, different metrics have been developed.
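For reference, these are the standard definitions of confidence and lift, the two measures mentioned in this section, in terms of itemset support for a rule $X \Rightarrow Y$ over a set of transactions $T$ (the notation is ours):

$$
\begin{aligned}
\operatorname{supp}(X) &= \frac{|\{t \in T : X \subseteq t\}|}{|T|} \\
\operatorname{conf}(X \Rightarrow Y) &= \frac{\operatorname{supp}(X \cup Y)}{\operatorname{supp}(X)} \\
\operatorname{lift}(X \Rightarrow Y) &= \frac{\operatorname{conf}(X \Rightarrow Y)}{\operatorname{supp}(Y)} = \frac{\operatorname{supp}(X \cup Y)}{\operatorname{supp}(X)\,\operatorname{supp}(Y)}
\end{aligned}
$$

A lift above 1 means $X$ and $Y$ appear together more often than would be expected if they were independent.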

Currently implemented measures are confidence and lift. If you are interested in rules according to a different metric of interest, you can simply adjust the `metric` and `min_threshold` arguments.
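To show how a `metric` / `min_threshold` pair acts as a filter on candidate rules, here is a library-independent sketch. `all_supports` and `generate_rules` are hypothetical helpers, not the API of any particular package. They only support confidence and lift, and `all_supports` enumerates every itemset, which is only reasonable for toy data.

```python
from itertools import combinations


def all_supports(transactions):
    """Support of every non-empty itemset over the item universe (tiny demos only)."""
    transactions = [frozenset(t) for t in transactions]
    items = sorted(set().union(*transactions))
    n = len(transactions)
    return {
        frozenset(c): sum(frozenset(c) <= t for t in transactions) / n
        for r in range(1, len(items) + 1)
        for c in combinations(items, r)
    }


def generate_rules(support, metric="confidence", min_threshold=0.8):
    """Return rules X -> Y whose chosen metric reaches min_threshold (sketch).

    `support` maps frozenset itemsets to their support and must contain every
    subset of each itemset it holds (Apriori output has this property).
    """
    if metric not in ("confidence", "lift"):
        raise ValueError(f"unsupported metric: {metric}")
    rules = []
    for itemset, supp_xy in support.items():
        if len(itemset) < 2 or supp_xy == 0:
            continue
        # Every non-empty proper subset can serve as the antecedent.
        for r in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, r)):
                consequent = itemset - antecedent
                confidence = supp_xy / support[antecedent]
                lift = confidence / support[consequent]
                score = confidence if metric == "confidence" else lift
                if score >= min_threshold:
                    rules.append((antecedent, consequent, supp_xy, confidence, lift))
    return rules


transactions = [{"bread", "milk"}, {"bread", "butter"}, {"bread", "milk", "butter"}, {"milk"}]
support = all_supports(transactions)
by_confidence = generate_rules(support, metric="confidence", min_threshold=0.6)
by_lift = generate_rules(support, metric="lift", min_threshold=1.2)
for antecedent, consequent, supp, conf, lift in by_confidence:
    print(sorted(antecedent), "->", sorted(consequent), f"conf={conf:.2f} lift={lift:.2f}")
```

Changing only `metric` and `min_threshold` changes which rules survive: here five rules pass the confidence filter and four pass the lift filter.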

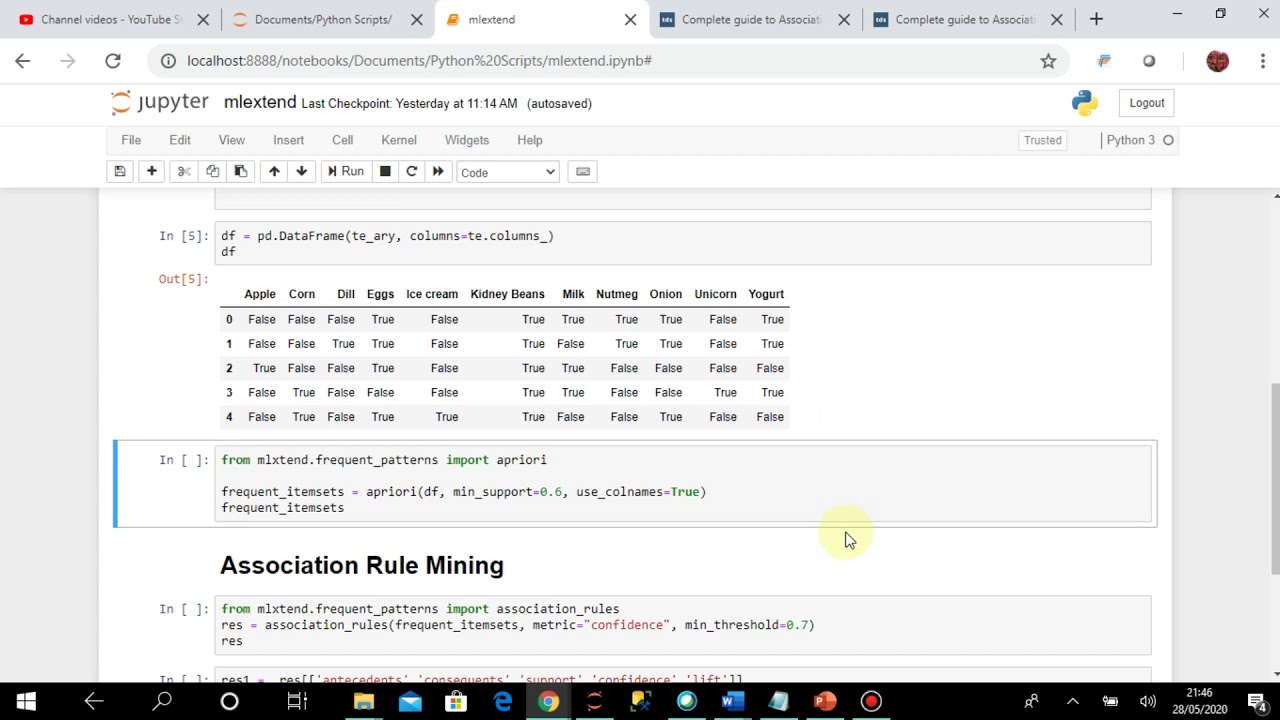

Pandas DataFrames make it easy to filter the results further. Since frozensets are sets, the item order does not matter.

The `metric` argument is the metric used to evaluate whether a rule is of interest, and `min_threshold` is the minimal threshold for that evaluation metric (selected via the `metric` parameter) used to decide whether a candidate rule is of interest. A further option (default: `False`) only computes the rule support and fills the other metric columns with NaNs. This is useful, for example, if the input DataFrame is incomplete.

Different statistical algorithms have been developed to implement association rule mining, and Apriori is one such algorithm. In this article we will study the theory behind the Apriori algorithm and later implement it in Python. For large datasets, there can be hundreds of items in hundreds of thousands of transactions. For instance, lift can be calculated for item 1 and item 2, item 1 and item 3, item 1 and item 4, then for item 2 and item 3, item 2 and item 4, and then for combinations of items, e.g. item 1, item 2 and item 3. As the example shows, this process can be extremely slow because of the number of combinations.
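A small sketch of why the brute-force route blows up: every itemset with two or more items would need its own support or lift calculation, and for $n$ distinct items there are $2^n - n - 1$ of them. The helper name is hypothetical.

```python
from itertools import combinations
from math import comb


def count_multi_item_combinations(n_items):
    """How many itemsets with two or more items can be formed from n_items items."""
    return sum(comb(n_items, k) for k in range(2, n_items + 1))


pairs = list(combinations([1, 2, 3, 4], 2))
print(pairs)  # [(1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4)]
for n in (4, 10, 20, 100):
    print(n, count_multi_item_combinations(n))
```

Apriori avoids enumerating all of these by only extending itemsets that are already frequent, since any superset of an infrequent itemset must also be infrequent.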

Enough theory; now it is time to see the Apriori algorithm in action. Another interesting point is that we do not need to write the script to calculate support, confidence, and lift ourselves. Such rules are easy to implement and highly explainable.

- NBMiner: Mining NB-frequent itemsets and NB-precise rules.
- OPUS Miner algorithm for filtered top-k association discovery.
- RKEEL: Interface to KEEL's association rule mining algorithm.
- RSarules: Mining algorithm which randomly samples association rules with one pre-chosen item as the consequent from a transaction dataset.

Apriori function to extract frequent itemsets for association rule mining.
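As a library-independent illustration of what such an Apriori function does, here is a minimal pure-Python sketch of the level-wise join, prune, and count loop. `apriori_frequent_itemsets` is a hypothetical name, not the API of any package listed above, and it rescans all transactions at every level, so it is meant for small data only.

```python
from itertools import combinations


def apriori_frequent_itemsets(transactions, min_support):
    """Return {frozenset: support} for every itemset with support >= min_support."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def supports(candidates):
        return {c: sum(c <= t for t in transactions) / n for c in candidates}

    # Level 1: individual items.
    singletons = {frozenset([item]) for t in transactions for item in t}
    current = {c: s for c, s in supports(singletons).items() if s >= min_support}
    frequent = dict(current)
    k = 2
    while current:
        # Join: merge pairs of frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a, b in combinations(current, 2) if len(a | b) == k}
        # Prune: drop candidates with an infrequent (k-1)-subset (downward closure).
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in current for sub in combinations(c, k - 1))
        }
        current = {c: s for c, s in supports(candidates).items() if s >= min_support}
        frequent.update(current)
        k += 1
    return frequent


transactions = [["bread", "milk"], ["bread", "butter"], ["bread", "milk", "butter"], ["milk"]]
for itemset, supp in apriori_frequent_itemsets(transactions, min_support=0.5).items():
    print(sorted(itemset), supp)
```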

Python has many libraries that implement Apriori. A typical application is understanding customer buying habits: by finding correlations and associations between the different items that customers place in their 'shopping basket', recurring patterns can be derived.
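A first step toward finding such associations is counting which items land in the same basket. The baskets below are made up for illustration, and `count_item_pairs` is a hypothetical helper.

```python
from collections import Counter
from itertools import combinations


def count_item_pairs(baskets):
    """Count how often each unordered pair of items appears in the same basket."""
    pair_counts = Counter()
    for basket in baskets:
        # Dedupe and sort so ('bread', 'milk') and ('milk', 'bread') are one pair.
        for pair in combinations(sorted(set(basket)), 2):
            pair_counts[pair] += 1
    return pair_counts


baskets = [["bread", "milk"], ["bread", "butter", "milk"], ["milk", "bread"], ["butter"]]
print(count_item_pairs(baskets).most_common(1))  # [(('bread', 'milk'), 3)]
```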

Confidence is defined for an association rule such as diapers → wine.

Has anyone used (and liked) any good frequent sequence mining packages in Python other than the FPM in MLlib? I am looking for a stable package, preferably still maintained.
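For the diapers → wine example, confidence is the fraction of baskets containing diapers that also contain wine. The baskets and the `rule_confidence` helper below are hypothetical:

```python
def rule_confidence(transactions, antecedent, consequent):
    """confidence(antecedent -> consequent) = count(both) / count(antecedent)."""
    antecedent, consequent = set(antecedent), set(consequent)
    with_antecedent = [set(t) for t in transactions if antecedent <= set(t)]
    if not with_antecedent:
        raise ValueError("antecedent never occurs; confidence is undefined")
    with_both = sum(1 for t in with_antecedent if consequent <= t)
    return with_both / len(with_antecedent)


baskets = [
    {"diapers", "wine"},
    {"diapers", "milk"},
    {"diapers", "wine", "bread"},
    {"wine"},
    {"wine", "cheese"},
]
# 2 of the 3 baskets with diapers also contain wine.
print(rule_confidence(baskets, {"diapers"}, {"wine"}))
```

Swapping the two sides gives 2/4 = 0.5, which illustrates that confidence is not symmetric.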

There are a couple of terms used in association analysis that are important to understand. The confidence for this.