The apriori algorithm is used to generate association rules. These rules are evaluated with measures called support, confidence and lift. The most prominent practical application of the algorithm is recommending products based on the products already in a user's cart.
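To make the three measures concrete, here is a minimal Python sketch that computes them for a single rule, {diapers} -> {beer}. The five-basket transaction list is a hypothetical toy dataset, and the helper names `support`, `confidence` and `lift` are illustrative, not taken from any library.

```python
from typing import Iterable, List, Set

def support(itemset: Iterable[str], transactions: List[Set[str]]) -> float:
    """Fraction of transactions that contain every item in `itemset`."""
    items = set(itemset)
    return sum(1 for t in transactions if items <= t) / len(transactions)

def confidence(antecedent: Iterable[str], consequent: Iterable[str],
               transactions: List[Set[str]]) -> float:
    """Estimated P(consequent | antecedent) = support(A | C) / support(A)."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)

def lift(antecedent: Iterable[str], consequent: Iterable[str],
         transactions: List[Set[str]]) -> float:
    """confidence(A -> C) / support(C); values above 1 mean A and C occur
    together more often than they would if they were independent."""
    return confidence(antecedent, consequent, transactions) / support(consequent, transactions)

# Hypothetical toy data: each transaction is the set of items in one basket.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

print(round(support({"diapers", "beer"}, transactions), 2))       # 0.6
print(round(confidence({"diapers"}, {"beer"}, transactions), 2))  # 0.75
print(round(lift({"diapers"}, {"beer"}, transactions), 2))        # 1.25
```

A lift of 1.25 says that, in this toy data, baskets with diapers contain beer 25% more often than baskets in general.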

It is based on the principle that every subset of a frequent itemset must also be a frequent itemset. The apriori algorithm was designed to operate on databases containing transactions, such as customer purchases in a store. An itemset is considered frequent if it meets a user-specified support threshold.
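The subset property can be checked directly on a small example. This sketch assumes `min_support` is a fraction of all transactions (some tools use an absolute count instead), uses a hypothetical five-basket dataset, and names its helpers `support_count` and `is_frequent` purely for illustration.

```python
from itertools import combinations
from typing import Iterable, List, Set

def support_count(itemset: Iterable[str], transactions: List[Set[str]]) -> int:
    """Number of transactions that contain every item of `itemset`."""
    items = set(itemset)
    return sum(1 for t in transactions if items <= t)

def is_frequent(itemset: Iterable[str], transactions: List[Set[str]],
                min_support: float) -> bool:
    """True if the itemset's relative support meets the threshold."""
    return support_count(itemset, transactions) / len(transactions) >= min_support

# Hypothetical toy data.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

min_support = 0.6
itemset = ("diapers", "beer")
print(is_frequent(itemset, transactions, min_support))  # True

# Every non-empty proper subset of a frequent itemset is also frequent,
# because any transaction containing the itemset also contains each subset.
subsets = [s for r in range(1, len(itemset)) for s in combinations(itemset, r)]
print(all(is_frequent(s, transactions, min_support) for s in subsets))  # True
```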

For large datasets, there can be hundreds of items in hundreds of thousands of transactions. Lift, for instance, would have to be calculated for every pair of items, then for every combination of three items, and so on. As this shows, the process can be extremely slow. Another interesting point is that we do not need to write the script to calculate support, confidence and lift. Association rules are easy to implement and highly explainable.
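A quick count shows why exhaustive enumeration breaks down. The sketch below uses standard combinatorial counts: with d distinct items there are 2^d - 1 non-empty itemsets and 3^d - 2^(d+1) + 1 possible rules with a non-empty antecedent and consequent. The brute-force function is only there to cross-check the closed formula for small d; all function names are illustrative.

```python
from itertools import combinations
from math import comb

def num_itemsets(d: int) -> int:
    """Non-empty itemsets that can be formed from d distinct items."""
    return 2 ** d - 1

def num_rules(d: int) -> int:
    """Rules A -> C with A and C non-empty and disjoint, drawn from d items."""
    return 3 ** d - 2 ** (d + 1) + 1

def num_rules_brute_force(d: int) -> int:
    """Cross-check: each itemset of size k >= 2 yields 2**k - 2 rules,
    since any non-empty proper subset can serve as the antecedent."""
    total = 0
    for k in range(2, d + 1):
        for _ in combinations(range(d), k):
            total += 2 ** k - 2
    return total

for d in (10, 20, 100):
    print(d, "items:", comb(d, 2), "pairs,", comb(d, 3), "triples,",
          num_itemsets(d), "itemsets in total")
```

With 100 items there are already 4,950 pairs and 161,700 triples to score, which is why apriori prunes candidates instead of enumerating them.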

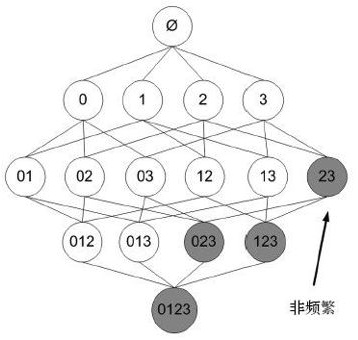

If you prefer to implement the algorithm yourself without worrying about performance, you can. Stated the other way around, the rule says that if an itemset is infrequent, then all of its supersets are also infrequent. The frequent 1-itemsets are found first, by counting how often each item occurs across the transactions. Apriori is an iterative approach to discovering the most frequent itemsets. The classic example is a database of purchases from a supermarket.
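The first pass, counting each item to obtain the frequent 1-itemsets, can be sketched with `collections.Counter`. Here `min_count` is an absolute count (an assumption for illustration), the baskets are hypothetical, and `frequent_1_itemsets` is an illustrative name.

```python
from collections import Counter
from typing import Dict, FrozenSet, List, Set

def frequent_1_itemsets(transactions: List[Set[str]],
                        min_count: int) -> Dict[FrozenSet[str], int]:
    """Count every item once per transaction and keep those meeting min_count."""
    counts = Counter(item for t in transactions for item in t)
    return {frozenset([item]): c for item, c in counts.items() if c >= min_count}

# Hypothetical toy data.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

level1 = frequent_1_itemsets(transactions, min_count=3)
print(sorted(next(iter(s)) for s in level1))  # ['beer', 'bread', 'diapers', 'milk']
```

Storing each transaction as a set ensures an item is counted at most once per basket.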

With the help of these association rules, the algorithm determines how strongly or how weakly two objects are connected. It is worth experimenting with different confidence and support values. All subsets of a frequent itemset must be frequent.
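To see how the support threshold changes the output, the sketch below enumerates every itemset by brute force (deliberately not apriori, so it stays obviously correct on a tiny dataset) and counts how many itemsets are frequent at several thresholds. The data, the thresholds and the function name are hypothetical; confidence thresholds can be explored the same way once rules are generated.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set

def frequent_itemsets_brute_force(transactions: List[Set[str]],
                                  min_support: float) -> Dict[FrozenSet[str], float]:
    """Check every possible itemset; only feasible for a handful of items."""
    items = sorted(set().union(*transactions))
    n = len(transactions)
    result: Dict[FrozenSet[str], float] = {}
    for k in range(1, len(items) + 1):
        for combo in combinations(items, k):
            s = sum(1 for t in transactions if set(combo) <= t) / n
            if s >= min_support:
                result[frozenset(combo)] = s
    return result

# Hypothetical toy data.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

for min_support in (0.4, 0.6, 0.8):
    found = frequent_itemsets_brute_force(transactions, min_support)
    print(min_support, len(found))
```

Raising the threshold shrinks the result quickly; in every case, each subset of a reported itemset is itself reported.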

It proceeds by identifying the frequent individual items in the database and extending them to larger and larger itemsets as long as those itemsets appear sufficiently often in the database. If an itemset is infrequent, all its supersets will be infrequent. The steps of the apriori algorithm are:

1. Scan the transaction database to get the support S of each 1-itemset, compare S with min_sup, and keep the frequent 1-itemsets.
2. Use join to generate a set of candidate k-itemsets.
3. Use the apriori property to prune the infrequent k-itemsets from this set.
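The join and prune steps can be sketched as a single candidate-generation function. This is a simplified variant: the join takes the union of any two frequent (k-1)-itemsets whose union has exactly k items, rather than the sorted-prefix join of the classic formulation, and relies on the prune step to discard candidates with an infrequent (k-1)-subset. `generate_candidates` and the example itemsets are hypothetical.

```python
from itertools import combinations
from typing import FrozenSet, Set

def generate_candidates(frequent_prev: Set[FrozenSet[str]], k: int) -> Set[FrozenSet[str]]:
    """Build candidate k-itemsets from the frequent (k-1)-itemsets."""
    prev = list(frequent_prev)
    # Join step: unite pairs of frequent (k-1)-itemsets that differ by one item.
    candidates: Set[FrozenSet[str]] = set()
    for i in range(len(prev)):
        for j in range(i + 1, len(prev)):
            union = prev[i] | prev[j]
            if len(union) == k:
                candidates.add(union)
    # Prune step: keep a candidate only if all its (k-1)-subsets are frequent.
    return {c for c in candidates
            if all(frozenset(sub) in frequent_prev for sub in combinations(c, k - 1))}

# Hypothetical frequent 2-itemsets.
frequent_2 = {
    frozenset({"bread", "milk"}),
    frozenset({"bread", "diapers"}),
    frozenset({"milk", "diapers"}),
    frozenset({"diapers", "beer"}),
}
# The join yields three triples; only {bread, milk, diapers} survives pruning.
print(generate_candidates(frequent_2, 3))
```

A candidate that survives pruning is not necessarily frequent: its support still has to be counted in the next scan and compared with min_sup.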

This algorithm uses two steps, “join” and “prune”, to reduce the search space. It searches for frequent itemsets in the dataset and builds on associations and correlations between those itemsets. It is the algorithm behind the “You may also like” suggestions commonly seen on recommendation platforms. The input data is typically supplied as a DataFrame in one-hot encoded format.
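As a minimal, dependency-free sketch of such an encoded format, each transaction becomes a row of booleans with one column per distinct item. The function name `one_hot_encode` is hypothetical; with pandas installed, `pandas.DataFrame(rows, columns=columns)` would wrap the result in a DataFrame.

```python
from typing import List, Sequence, Tuple

def one_hot_encode(transactions: Sequence[Sequence[str]]) -> Tuple[List[str], List[List[bool]]]:
    """Return the sorted item columns and one boolean row per transaction."""
    columns = sorted(set().union(*transactions))
    # True where the transaction contains the column's item.
    rows = [[item in t for item in columns] for t in transactions]
    return columns, rows

columns, rows = one_hot_encode([["milk", "bread"], ["bread", "eggs"]])
print(columns)  # ['bread', 'eggs', 'milk']
print(rows)     # [[True, False, True], [True, True, False]]
```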

The algorithm uses a “bottom-up” approach, where frequent subsets are extended one item at a time (candidate generation) and groups of candidates are tested against the data. The itemsets that pass this test are called frequent itemsets. Create a database of transactions, each containing some of these items. The information can be stored in a file or a DBMS (e.g. ORACLE). Also, using `combinations()` like this is not optimal.

Parameters: `max_num_products` (int): the maximum number of unique items or products that may be present in the transactions. An array containing the transaction data.
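Putting the bottom-up loop together, here is a compact, self-contained sketch of the level-wise search: one pass to count single items, then repeated join, prune and counting passes until no new frequent itemsets appear. It assumes an absolute `min_count` threshold and in-memory Python sets rather than a file or DBMS; the function name `apriori` and the toy data are illustrative, not any particular library's API.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set

def apriori(transactions: List[Set[str]], min_count: int) -> Dict[FrozenSet[str], int]:
    """Return every itemset whose support count is at least min_count."""
    # Pass 1: count single items (each item once per transaction).
    counts: Dict[FrozenSet[str], int] = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    level = {s: n for s, n in counts.items() if n >= min_count}
    frequent = dict(level)

    k = 2
    while level:
        prev = set(level)
        # Join: unite pairs of frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune: drop candidates that have an infrequent (k-1)-subset.
        candidates = {c for c in candidates
                      if all(frozenset(s) in prev for s in combinations(c, k - 1))}
        if not candidates:
            break
        # One scan of the data counts the surviving candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        level = {s: n for s, n in counts.items() if n >= min_count}
        frequent.update(level)
        k += 1
    return frequent

# Hypothetical toy data.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

for itemset, count in sorted(apriori(transactions, 3).items(),
                             key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), count)
```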

In general, the number of passes made over the database is equal to the length of the longest rule found. The algorithm generates strong association rules from frequent itemsets by using prior knowledge of itemset properties. It is one of the most widely used algorithms for association rule mining.

Repeat the join and prune steps k times, where k is the number of items in the largest frequent itemsets: the last iteration yields frequent itemsets containing k items. Rule generation then works in two phases. First, minimum support is applied to find all frequent itemsets in a database. Second, these frequent itemsets and the minimum confidence constraint are used to form rules.
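The second phase can be sketched as follows: for every frequent itemset with at least two items, each non-empty proper subset is tried as the antecedent, and the rule is kept if its confidence meets `min_confidence`. The support counts below are hypothetical (a five-transaction toy basket list at a minimum count of 3), and `generate_rules` is an illustrative name. Because every subset of a frequent itemset is itself frequent, each antecedent's count is guaranteed to be in the dictionary.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Tuple

# (antecedent, consequent, support, confidence)
Rule = Tuple[FrozenSet[str], FrozenSet[str], float, float]

def generate_rules(counts: Dict[FrozenSet[str], int], n_transactions: int,
                   min_confidence: float) -> List[Rule]:
    """Form rules A -> C from frequent itemsets and keep the confident ones."""
    rules: List[Rule] = []
    for itemset, count in counts.items():
        # Singletons produce no rules: range(1, 1) is empty.
        for r in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, r)):
                consequent = itemset - antecedent
                conf = count / counts[antecedent]  # support(A | C) / support(A)
                if conf >= min_confidence:
                    rules.append((antecedent, consequent, count / n_transactions, conf))
    return rules

# Hypothetical support counts of the frequent itemsets (5 transactions, min count 3).
counts = {
    frozenset({"bread"}): 4, frozenset({"milk"}): 4,
    frozenset({"diapers"}): 4, frozenset({"beer"}): 3,
    frozenset({"bread", "milk"}): 3, frozenset({"bread", "diapers"}): 3,
    frozenset({"milk", "diapers"}): 3, frozenset({"diapers", "beer"}): 3,
}

for a, c, sup, conf in generate_rules(counts, n_transactions=5, min_confidence=0.8):
    print(sorted(a), "->", sorted(c), f"support={sup:.2f} confidence={conf:.2f}")
```

At a minimum confidence of 0.8 only {beer} -> {diapers} survives; lowering it to 0.7 keeps all eight rules.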