The Apriori algorithm proceeds by identifying the frequent individual items in the database and extending them to larger and larger item sets as long as those item sets appear sufficiently often in the database. In today’s world, the goal of any organization is to increase revenue.

Can this be done by pitching just one product at a time to the customer? The answer is a clear no.

Hence, organizations began mining data related to frequently bought items. Market Basket Analysis is one of the key techniques used by large retailers to uncover associations between items. They try to find associations between different items and products that can be sold together, which assists in the right product placement. Typically, it figures out which products are being bought together, so that organizations can place products accordingly. Let’s understand this better with an example: people who buy bread usually buy butter too.

So if customers buy bread and butter and see a discount or an offer on eggs, they will be encouraged to spend more. Suppose item A is being bought by the customer; the chance of item B also being picked by the customer under the same transaction ID is then found out. But here comes a constraint.

There are three ways to measure association: support, confidence, and lift. Support: It gives the fraction of transactions that contain both item A and item B. Basically, support tells us about the frequently bought items or the combinations of items bought frequently. With this, we can filter out the items that are bought less frequently. A frequent itemset is an itemset whose support value is greater than a threshold value (the minimum support).
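As a minimal sketch of these measures, the snippet below computes support as described above. The text does not spell out confidence and lift, so the functions use their standard definitions (confidence of A -> B is the support of A and B together divided by the support of A; lift is that confidence divided by the support of B). The transactions and function names are made up for illustration.

```python
# Hypothetical transactions; the item names are illustrative only.
transactions = [
    {"bread", "butter"},
    {"bread", "butter", "eggs"},
    {"bread"},
    {"milk", "eggs"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Support of A and B together, divided by the support of A."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)

def lift(antecedent, consequent, transactions):
    """Confidence of A -> B, divided by the support of B."""
    return (confidence(antecedent, consequent, transactions)
            / support(consequent, transactions))

print(support({"bread", "butter"}, transactions))
print(confidence({"bread"}, {"butter"}, transactions))
print(lift({"bread"}, {"butter"}, transactions))
```

A lift above 1 suggests the two items appear together more often than they would if they were bought independently.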

Let’s say we have the following data of a store. Iteration 1: Let’s assume a minimum support value, create the itemsets of size 1, and calculate their support values. Any item whose support value is less than the minimum support value is eliminated. We then have the final table F1. Iteration 2: Next, we create itemsets of size 2 and calculate their support values.

All the combinations of itemsets in F1 are used in this iteration. Itemsets having support less than the minimum support are eliminated again. Pruning: candidate itemsets that contain an infrequent subset are then discarded. We will be using the following online transactional data of a retail store for generating association rules.
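The iterations described above can be sketched in plain Python. This is an illustrative outline rather than the article's own implementation: the function name, the sample transactions, and the minimum support of 0.6 are all assumptions chosen for the example.

```python
from itertools import combinations

def apriori_frequent_itemsets(transactions, min_support):
    """Return {itemset: support} for every itemset meeting min_support."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def support_of(candidate):
        return sum(1 for t in transactions if candidate <= t) / n

    # Iteration 1: itemsets of size 1.
    items = {item for t in transactions for item in t}
    current = {}
    for item in items:
        candidate = frozenset([item])
        s = support_of(candidate)
        if s >= min_support:
            current[candidate] = s

    frequent = dict(current)
    k = 2
    while current:
        prev = set(current)
        # Join: combine frequent (k-1)-itemsets into candidates of size k.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune: drop candidates that have an infrequent (k-1)-subset.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in prev for s in combinations(c, k - 1))
        }
        # Keep only candidates that meet the minimum support.
        current = {}
        for c in candidates:
            s = support_of(c)
            if s >= min_support:
                current[c] = s
        frequent.update(current)
        k += 1
    return frequent

# Hypothetical store data, for illustration only.
sample = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "eggs"},
    {"butter", "milk"},
    {"bread", "butter", "milk"},
]
for itemset, s in apriori_frequent_itemsets(sample, 0.6).items():
    print(sorted(itemset), s)
```

The pruning step is what keeps the candidate count manageable: a size-k candidate is only counted against the data if every one of its (k-1)-subsets survived the previous iteration.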

Step 1: First, you need to get your pandas and MLxtend libraries imported and read the data.

Step 2: In this step, we will be doing:
1. Data clean-up, which includes removing spaces from some of the descriptions
2. Dropping the rows that don’t have invoice numbers and removing the credit transactions

Step 3: After the clean-up, we need to consolidate the items into one transaction per row with each product. For the sake of keeping the data set small, we are only looking at sales for France.

After that, we will:
1. Generate frequent itemsets that have a support value of at least a chosen minimum threshold
2. Generate the rules with their corresponding support, confidence and lift
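The article performs these steps with pandas and MLxtend. As a library-free sketch of the same clean-up and consolidation logic, the snippet below uses only the standard library on made-up rows. The field names (`invoice`, `description`, `country`) and the convention that credit transactions carry a "C" prefix on the invoice number are assumptions for illustration, not details taken from the data set.

```python
from collections import defaultdict

# Hypothetical rows; field names and values are assumptions.
rows = [
    {"invoice": "1001", "description": " TEA SET ", "country": "France"},
    {"invoice": "1001", "description": "NAPKINS", "country": "France"},
    {"invoice": "C1002", "description": "TEA SET", "country": "France"},  # assumed credit
    {"invoice": None, "description": "NAPKINS", "country": "France"},     # no invoice number
    {"invoice": "1003", "description": "NAPKINS ", "country": "France"},
    {"invoice": "1004", "description": "TEA SET", "country": "Germany"},
]

def build_baskets(rows, country):
    """Clean the rows and group item descriptions by invoice number."""
    baskets = defaultdict(set)
    for row in rows:
        invoice = row["invoice"]
        if not invoice:                 # drop rows without an invoice number
            continue
        if invoice.startswith("C"):     # drop credit transactions (assumed prefix)
            continue
        if row["country"] != country:   # keep a single country to stay small
            continue
        baskets[invoice].add(row["description"].strip())  # remove stray spaces
    return dict(baskets)

def one_hot(baskets):
    """One row per transaction, one True/False flag per product."""
    items = sorted({item for basket in baskets.values() for item in basket})
    return {
        invoice: {item: item in basket for item in items}
        for invoice, basket in baskets.items()
    }

baskets = build_baskets(rows, "France")
print(one_hot(baskets))
```

The one-row-per-transaction, one-column-per-product layout is the shape that frequent itemset routines typically expect as input.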

Item: an article in the basket. Itemset: a group of items purchased together in a single transaction. Closed itemset: none of its parents (immediate supersets) has the same support as the itemset. Maximal itemset: all parents of that itemset must be infrequent. A set of items together is called an itemset.
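The closed and maximal definitions above can be checked with a short sketch. It assumes the complete set of frequent itemsets and their support counts is already available; the function name and the counts below are hypothetical. Because every superset of an infrequent itemset is also infrequent, checking supersets within the frequent set is enough here.

```python
def closed_and_maximal(frequent):
    """Split frequent itemsets into closed and maximal ones.

    `frequent` maps frozenset -> support count and is assumed to hold
    every frequent itemset for the chosen minimum support.
    """
    closed, maximal = set(), set()
    for itemset, count in frequent.items():
        supersets = [s for s in frequent if itemset < s]
        if not supersets:
            maximal.add(itemset)        # no frequent superset at all
        if all(frequent[s] != count for s in supersets):
            closed.add(itemset)         # no superset with equal support
    return closed, maximal

# Hypothetical support counts, for illustration only.
frequent = {
    frozenset({"bread"}): 4,
    frozenset({"butter"}): 4,
    frozenset({"milk"}): 3,
    frozenset({"bread", "butter"}): 3,
    frozenset({"butter", "milk"}): 3,
}
closed, maximal = closed_and_maximal(frequent)
print(sorted(sorted(s) for s in closed))
print(sorted(sorted(s) for s in maximal))
```

Here `{"milk"}` is not closed because `{"butter", "milk"}` has the same count, and every maximal itemset is also closed.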

If an itemset has k items, it is called a k-itemset. An itemset consists of one or more items. Thus, frequent itemset mining is a data mining technique to identify the items that often occur together.

Examples are bread and butter, or a laptop and antivirus software. Frequent itemset or pattern mining is broadly used because of its wide applications in mining association rules, correlations, graph pattern constraints based on frequent patterns, sequential patterns, and many other data mining tasks. Many methods are available for improving the efficiency of the algorithm. Hash-Based Technique: This method uses a hash-based structure called a hash table for generating the k-itemsets and their corresponding counts.

It uses a hash function for generating the table. Transaction Reduction: This method reduces the number of transactions scanned in each iteration. Partitioning: This method requires only two database scans to mine the frequent itemsets. It says that for any itemset to be potentially frequent in the database, it should be frequent in at least one of the partitions of the database.
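The partitioning idea can be sketched as two passes: the first finds itemsets that are frequent inside each partition, and the second counts those candidates over the whole database. The code below is an illustrative outline under stated assumptions (function names, equal-sized partitions, and a brute-force local search that only suits small data), not a tuned implementation.

```python
from itertools import combinations

def local_frequent(partition, min_support):
    """Itemsets frequent within one partition (brute force, small data only)."""
    n = len(partition)
    items = sorted({item for t in partition for item in t})
    found = set()
    for k in range(1, len(items) + 1):
        level = {
            frozenset(c) for c in combinations(items, k)
            if sum(1 for t in partition if set(c) <= t) / n >= min_support
        }
        if not level:   # no frequent k-itemsets, so none of size k+1 either
            break
        found |= level
    return found

def partitioned_frequent(transactions, min_support, n_partitions=2):
    """Two-scan frequent itemset mining using database partitions."""
    transactions = [frozenset(t) for t in transactions]
    size = -(-len(transactions) // n_partitions)   # ceiling division
    partitions = [transactions[i:i + size]
                  for i in range(0, len(transactions), size)]

    # Scan 1: anything globally frequent must be frequent in some partition.
    candidates = set()
    for part in partitions:
        candidates |= local_frequent(part, min_support)

    # Scan 2: count each candidate once over the full database.
    n = len(transactions)
    result = {}
    for c in candidates:
        s = sum(1 for t in transactions if c <= t) / n
        if s >= min_support:
            result[c] = s
    return result

# Hypothetical store data, for illustration only.
sample = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "eggs"},
    {"butter", "milk"},
    {"bread", "butter", "milk"},
]
print(partitioned_frequent(sample, 0.6))
```

The second scan is needed because an itemset can be frequent in one partition without being frequent overall.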

Sampling: This method picks a random sample S from database D and then searches for frequent itemsets in S. It may be possible to lose a global frequent itemset. This risk can be reduced by lowering the min_sup. Dynamic Itemset Counting: This technique can add new candidate itemsets at any marked start point of the database. In the education field: extracting association rules in data mining of admitted students through characteristics and specialties.

In forestry: analysis of the probability and intensity of forest fires with the forest fire data. The Apriori algorithm reduces the size of the itemsets in the database considerably, providing good performance. Thus, data mining helps consumers and industries in the decision-making process. By Annalyn Ng, Ministry of Defence of Singapore. Short stories or tales always help us understand a concept better, but this is a true story: Wal-Mart’s beer and diaper parable.

A salesperson from Wal-Mart tried to increase the sales of the store by bundling products together and giving discounts on them. He bundled bread and jam, which made it easy for a customer to find them together. Furthermore, customers could buy them together because of the discount. The aim was to find more such opportunities and more products that could be tied together. With the quick growth in e-commerce applications, a vast quantity of data accumulates in months, not years.

Data mining, also known as Knowledge Discovery in Databases (KDD), is used to find anomalies, correlations, patterns, and trends to predict outcomes. Association rule learning is a prominent and well-explored method for determining relations among variables in large databases. Let us take a look at the formal definition of the problem of association rules given by Rakesh Agrawal, the President and Founder of the Data Insights Laboratories. Let I = {i1, i2, ..., in} be a set of n attributes called items and D = {t1, t2, ..., tm} be the set of transactions.

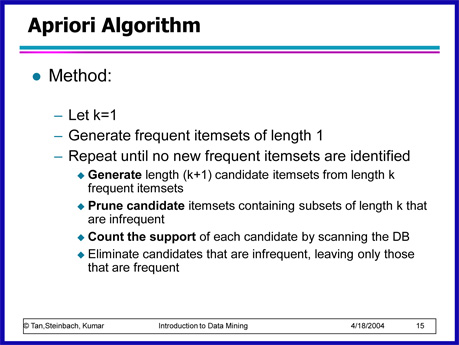

Every transaction in the set has a unique transaction ID and consists of a subset of the items. The set of transactions is called the database.

The Apriori algorithm rests on two properties: 1. All subsets of a frequent itemset must be frequent 2. Similarly, for any infrequent itemset, all its supersets must be infrequent too.

Let us now look at the intuitive explanation of the algorithm with the help of the example we used above. Before beginning the process, let us set the support threshold.

The algorithm can be used on large itemsets. Sometimes, it may need to find a large number of candidate rules, which can be computationally expensive.

Calculating support is also expensive because it has to go through the entire database. Through this article, we have seen how data mining is helping us make decisions that are advantageous for both customers and industries. The algorithm has this odd name because it uses ‘prior’ knowledge of frequent itemset properties. We shall now explore the Apriori algorithm implementation in detail.

With the help of these association rules, it determines how strongly or how weakly two objects are connected. Now, what is association rule mining? Association rule mining is a technique to identify the frequent patterns and the correlation between the items present in a dataset. In supervised learning, the algorithm works with a basic example set. It runs the algorithm again and again with different weights on certain factors.

Apriori Algorithm, by International School of Engineering. All subsets of a frequent itemset must be frequent. If an itemset is infrequent, all its supersets will be infrequent. Put simply, the Apriori principle states that if an itemset is infrequent, then all its supersets must also be infrequent.
